Defining General Architectures for 3D Applications

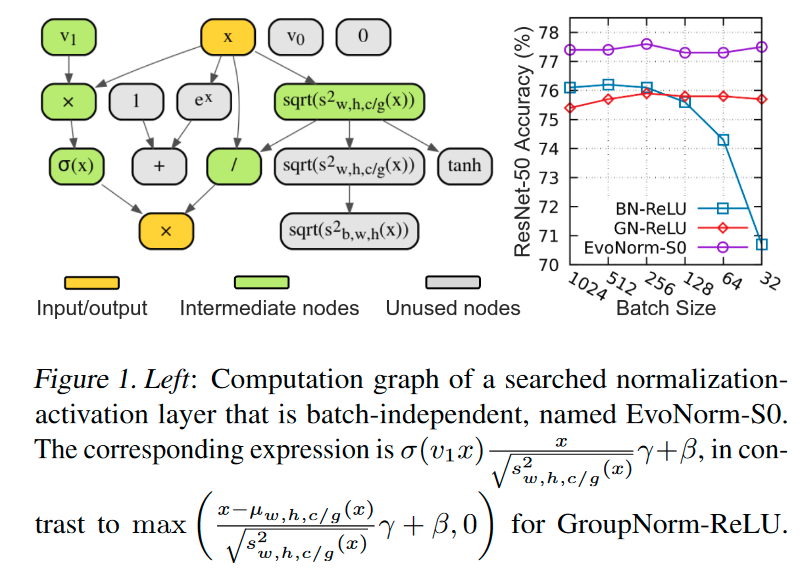

Evolving Normalization-Activation Layers

Paper: https://arxiv.org/pdf/2004.02967.pdf

Implementation inspired by: https://github.com/digantamisra98/EvoNorm
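For reference, the batch-independent variant from the paper, EvoNorm-S0, replaces a normalization + activation pair with a single expression, y = x · sigmoid(v · x) / s(x) · γ + β, where s(x) is the standard deviation over each group of channels. The sketch below adapts it to 5D volumes (N, C, D, H, W). The class name `EvoNormS0Sketch3d`, the default of 8 groups and `eps=1e-5` are assumptions; this is not the implementation used in this project or in the linked repository.

```python
import torch
import torch.nn as nn


class EvoNormS0Sketch3d(nn.Module):
    """Hypothetical EvoNorm-S0 sketch for 5D inputs (N, C, D, H, W).

    y = x * sigmoid(v * x) / group_std(x) * gamma + beta
    """

    def __init__(self, channels: int, groups: int = 8, eps: float = 1e-5):
        super().__init__()
        if channels % groups != 0:
            raise ValueError("channels must be divisible by groups")
        self.groups = groups
        self.eps = eps
        shape = (1, channels, 1, 1, 1)
        self.gamma = nn.Parameter(torch.ones(shape))  # per-channel scale
        self.beta = nn.Parameter(torch.zeros(shape))  # per-channel shift
        self.v = nn.Parameter(torch.ones(shape))      # per-channel gate slope

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        n, c = x.shape[:2]
        # Gather each group of C/G channels (and all its voxels) into one row.
        xg = x.reshape(n, self.groups, -1)
        mean = xg.mean(dim=2, keepdim=True)
        var = ((xg - mean) ** 2).mean(dim=2, keepdim=True)
        std = torch.sqrt(var + self.eps)  # (N, G, 1)
        # Repeat each group's std for its C/G channels, then broadcast over D, H, W.
        std = std.repeat_interleave(c // self.groups, dim=1).view(n, c, 1, 1, 1)
        return x * torch.sigmoid(self.v * x) / std * self.gamma + self.beta


if __name__ == "__main__":
    norm = EvoNormS0Sketch3d(16, groups=4)
    print(norm(torch.randn(2, 16, 8, 32, 32)).shape)  # torch.Size([2, 16, 8, 32, 32])
```

Because S0 keeps no running batch statistics, it behaves identically in training and evaluation mode, which can help with the small batch sizes typical of 3D volumes.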

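```python
import torch

# Quantize is defined in this project's code (not shown here).
# Assumed arguments: Quantize(dim=64, n_embed=100), i.e. a codebook of 100 vectors of size 64.
quant = Quantize(64, 100)

x = torch.randn(16, 16, 8, 64)  # channels-last input; last dim matches the assumed dim=64
quantize, diff, embed_ind = quant(x)  # quantized tensor, quantization loss, code indices

print(quantize.shape, diff, embed_ind.shape)
```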
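The `Quantize(64, 100)` call returning `(quantize, diff, embed_ind)` looks like a VQ-VAE style vector quantizer: each 64-dimensional vector along the last axis is snapped to its nearest entry in a codebook of 100 vectors. The following is a minimal sketch under that assumption. `SimpleQuantize` is hypothetical; it learns its codebook through a codebook loss rather than the exponential-moving-average updates some implementations use, and the commitment weight `beta=0.25` is a common default, not a value taken from this project.

```python
import torch
import torch.nn as nn


class SimpleQuantize(nn.Module):
    """Hypothetical minimal vector quantizer (codebook learned by gradient, no EMA)."""

    def __init__(self, dim: int, n_embed: int, beta: float = 0.25):
        super().__init__()
        self.dim = dim
        self.beta = beta
        self.embed = nn.Parameter(torch.randn(n_embed, dim))  # codebook (n_embed, dim)

    def forward(self, x: torch.Tensor):
        flat = x.reshape(-1, self.dim)           # (M, dim)
        dist = torch.cdist(flat, self.embed)     # Euclidean distances (M, n_embed)
        embed_ind = dist.argmin(dim=1)           # index of nearest code per vector
        q = self.embed[embed_ind].view_as(x)     # quantized vectors
        codebook_loss = (q - x.detach()).pow(2).mean()  # pulls codes towards encoder outputs
        commit_loss = (q.detach() - x).pow(2).mean()    # keeps encoder outputs near codes
        diff = codebook_loss + self.beta * commit_loss
        quantize = x + (q - x).detach()          # straight-through estimator for gradients
        return quantize, diff, embed_ind.view(x.shape[:-1])
```

With the shapes used above, `SimpleQuantize(64, 100)` applied to a `(16, 16, 8, 64)` tensor returns a quantized tensor of the same shape, a scalar loss, and a `(16, 16, 8)` tensor of code indices.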

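```python
import torch

# ConvBn: 3D convolution block defined in this project's code (not shown here)
m = ConvBn(1, 16, convsize=4, stride=(2, 2, 2), padding=(1, 1, 1))

inp = torch.randn(20, 1, 16, 64, 64)  # batch of volumes, (N, C, D, H, W)
output = m(inp)
print(output.shape)
```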
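If `ConvBn` wraps a standard `nn.Conv3d` followed by `nn.BatchNorm3d` (an assumption, since the class is not shown here), it can be sketched as below. The hypothetical `ConvBnSketch` keeps the same constructor arguments, and the hypothetical helper `conv_out` evaluates the Conv3d size formula floor((n + 2p - k) / s) + 1 per spatial dimension. For the example input this gives depth (16 + 2 - 4) // 2 + 1 = 8 and height/width (64 + 2 - 4) // 2 + 1 = 32.

```python
import torch
import torch.nn as nn


class ConvBnSketch(nn.Module):
    """Hypothetical Conv3d + BatchNorm3d block; the project's ConvBn may differ."""

    def __init__(self, in_ch, out_ch, convsize=3, stride=(1, 1, 1), padding=(0, 0, 0)):
        super().__init__()
        # bias=False: the BatchNorm shift (beta) makes a conv bias redundant.
        self.conv = nn.Conv3d(in_ch, out_ch, kernel_size=convsize,
                              stride=stride, padding=padding, bias=False)
        self.bn = nn.BatchNorm3d(out_ch)

    def forward(self, x):
        return self.bn(self.conv(x))


def conv_out(size, k, s, p):
    # Output length of one spatial dim for Conv3d with dilation 1.
    return (size + 2 * p - k) // s + 1


if __name__ == "__main__":
    m = ConvBnSketch(1, 16, convsize=4, stride=(2, 2, 2), padding=(1, 1, 1))
    print(m(torch.randn(20, 1, 16, 64, 64)).shape)  # torch.Size([20, 16, 8, 32, 32])
```

With kernel 4, stride 2 and padding 1, every spatial dimension is halved exactly, which makes this setting a common choice for downsampling stages.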

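```python
# ConvTpBn: presumably the transposed-convolution (upsampling) counterpart of ConvBn,
# also defined in this project's code; applied here to the same `inp` tensor as above
m = ConvTpBn(1, 16, convsize=3, stride=(2, 2, 2), padding=(1, 1, 1))
output = m(inp)
print(output.shape)
```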
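Assuming `ConvTpBn` wraps `nn.ConvTranspose3d` followed by `nn.BatchNorm3d` (not confirmed by the code shown), its output size per spatial dimension is (n - 1) · s - 2p + k + output_padding. With kernel 3, stride 2, padding 1 and no output padding, the 16 × 64 × 64 volumes above become 31 × 127 × 127 rather than an exact doubling; setting `output_padding=1` yields 32 × 128 × 128. `ConvTpBnSketch` and `tconv_out` below are hypothetical names for illustration.

```python
import torch
import torch.nn as nn


class ConvTpBnSketch(nn.Module):
    """Hypothetical ConvTranspose3d + BatchNorm3d block; the project's ConvTpBn may differ."""

    def __init__(self, in_ch, out_ch, convsize=3, stride=(1, 1, 1),
                 padding=(0, 0, 0), output_padding=0):
        super().__init__()
        # output_padding must be smaller than the stride; it adds extra rows at one side.
        self.conv = nn.ConvTranspose3d(in_ch, out_ch, kernel_size=convsize, stride=stride,
                                       padding=padding, output_padding=output_padding,
                                       bias=False)
        self.bn = nn.BatchNorm3d(out_ch)

    def forward(self, x):
        return self.bn(self.conv(x))


def tconv_out(size, k, s, p, op=0):
    # Output length of one spatial dim for ConvTranspose3d with dilation 1.
    return (size - 1) * s - 2 * p + k + op


if __name__ == "__main__":
    # Formula check for the 16x64x64 volumes used above: 16 -> 31 and 64 -> 127
    print(tconv_out(16, 3, 2, 1), tconv_out(64, 3, 2, 1))  # 31 127
    m = ConvTpBnSketch(1, 16, convsize=3, stride=(2, 2, 2),
                       padding=(1, 1, 1), output_padding=1)
    print(m(torch.randn(2, 1, 4, 8, 8)).shape)  # torch.Size([2, 16, 8, 16, 16])
```

The small input in the usage example keeps memory low: the full `(20, 1, 16, 64, 64)` batch upsampled to 16 channels at 31 × 127 × 127 already needs roughly 640 MB per float32 activation tensor.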